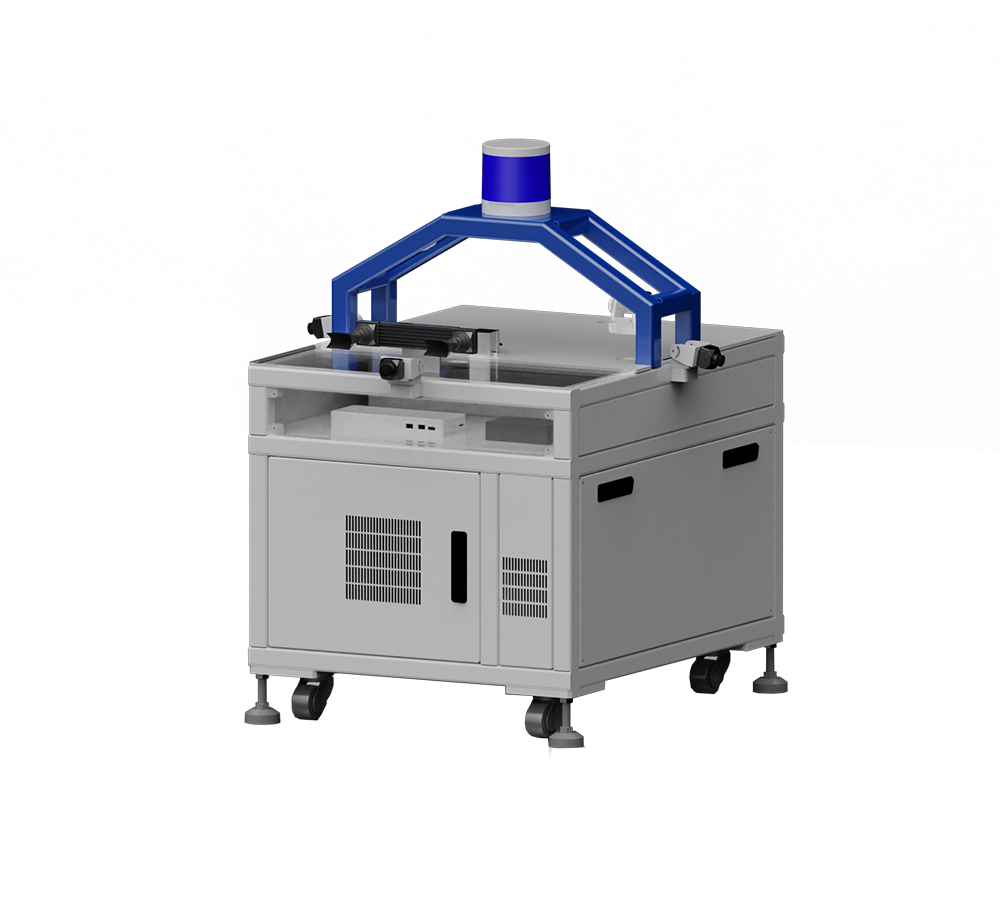

ALG-TECH introduces the Vision-Radar Multimodal Perception System, which combines the spatial modeling of binocular stereo vision with the high-penetration detection of radar to deliver real-time multimodal environmental perception. Its deep learning engine uses neural networks for high-precision visual relocalization, obstacle detection, map building, and other functions, allowing the system to localize itself without relying on GPS. The system is suited to intelligent rail transit, auxiliary safety, and navigation for unmanned mobile vehicles.
